June 22, 2007

OpenLearn Unit Link Map

My feedreader seemed to be full this morning with posts about WikiMindMap, which produces a mindmap view of Wikipedia content.

At first I thought this was doing something really clever, but a tiny bit of digging suggests they're just link scraping (and exploiting a bit of structure, like "See also" information) and then using a (really neat) third-party tool, the Flash Browser for FreeMind mind maps, to display the results.

One thing I will take away from the WikiMindMap, though, is the way it provides a really "gentle" navigational display for the links contained on a page (and one I may reuse in a searchfeedr context).

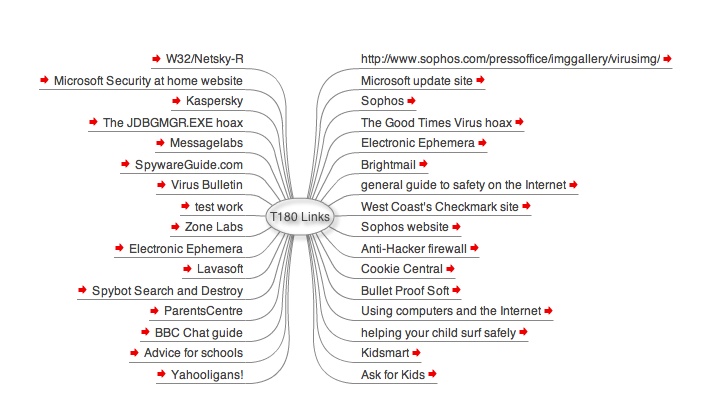

After a quick "View Source", I just had to knock up a demo link viewer for the links in an OpenLearn Unit, slightly modifying the OpenLearn XML Unit link scraper that I built some time ago.

Anyway - here's a demo of the links contained in T180_8: OpenLearn Unit link mapper demo:

Some basic link scraping XSL generates a crude FreeMind XML file which is then passed to the flash FreeMind viewer.
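For anyone who would rather not wade through XSL, here is a rough Python sketch of the same idea: pull the links out of a unit's XML and write them out as a flat FreeMind map. It is not the XSL used for the demo. The assumption that links appear as elements carrying an `href` attribute is mine (the real OpenLearn XML may mark links up differently), and `links_to_freemind` is a made-up name for illustration. The output uses the basic FreeMind `.mm` layout of nested `node` elements with `TEXT` and `LINK` attributes inside a `map` element.

```python
import xml.etree.ElementTree as ET


def links_to_freemind(unit_xml: str, title: str) -> str:
    """Build a crude one-level FreeMind map from the links in an XML document.

    Assumption: links are elements with an ``href`` attribute. Adjust the
    lookup below if the source XML marks links up some other way.
    """
    source = ET.fromstring(unit_xml)

    # FreeMind maps are a <map> element wrapping nested <node> elements.
    mm = ET.Element("map", version="0.8.1")
    root = ET.SubElement(mm, "node", TEXT=title)

    seen = set()
    for el in source.iter():
        href = el.get("href")
        if not href or href in seen:
            continue  # skip non-links and links we have already added
        seen.add(href)
        # Use the link text as the node label, falling back to the URL.
        label = "".join(el.itertext()).strip() or href
        ET.SubElement(root, "node", TEXT=label, LINK=href)

    return ET.tostring(mm, encoding="unicode")


if __name__ == "__main__":
    sample = (
        '<unit><p>See <a href="http://example.org/a">Site A</a> and '
        '<a href="http://example.org/b"/>.</p></unit>'
    )
    print(links_to_freemind(sample, "T180_8"))
```

The resulting string can be saved as a `.mm` file and handed to whatever FreeMind viewer you have to hand. Duplicate links are dropped so the map does not fill up with repeated nodes.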

If I get a chance over the weekend, I'll generalise the viewer and add it to the "Towards an OpenLearn XML (Re)Publishing System" demo, providing a map view to arbitrary OpenLearn Units (or at least the ones for which I have URL-powered access to the XML...).

I also need to have a think about putting a bit of structure into the links view (maybe extracting links by section [UPDATE: demoed here - Mapping OpenLearn XML, Pass 2], and maybe even trying to use something like the Yahoo content analyser to add a few relevant tags) and exploring how I might add media assets scraped from OpenLearn Units into the map view.

Posted by ajh59 at June 22, 2007 12:22 PM

Erm:

http://scienceoftheinvisible.blogspot.com/2007/06/holiday-destination.html

!

Posted by: AJ Cann at June 22, 2007 01:51 PM

All I want to find is a program that will create a tree map of saved HTML copies of Wikipedia web pages. That would be better than anything you have on your blog page. Amen

Posted by: John at November 7, 2007 02:32 AM